|

Zen

A cross-platform functional programming language

|

|

Zen

A cross-platform functional programming language

|

Please see Getting Started prior to reading this.

Modern CPUs have architectures with many cores, from 2, to 4, to 8, 16, 32, 64, etc. While the number of cores might keep going up, the processing power of each core will hit an upper limit, if not already.

Zenlang works on the principle of true distributed computation on such architectures. Under zenlang, any computational activity is split into smaller tasks, such that each task is a small unit of computation and has all the data necessary to complete its execution.

In zenlang, greater preference is given to resource parallelism, where write-only resources are partitioned by task and read-only resources are replicated across each task.

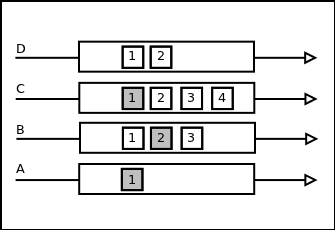

Under zenlang, each item in the runtime queue is known as a fiber. A fiber is a complex structure similar to a process stack. It consists of a stack of continuations, where each continuation is a list of closures, as shown in this diagram:

In this diagram, A, B, C and D are continuations and the numbered boxes within them are the closures. A is the first continuation to be run, where A1 would be the initial closure being run. This is typically the main() function or the unit test function.

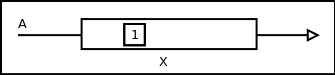

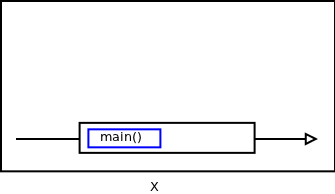

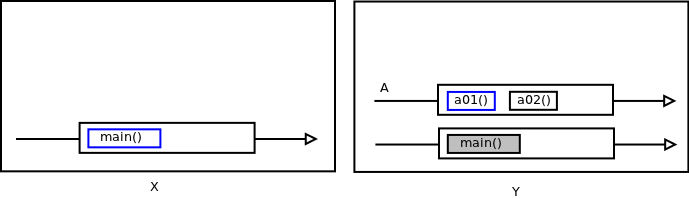

When the system first starts, it initializes the runtime queue and adds the first continuation to it. This continuation contains a single closure that invokes the main() function. The runtime queue looks like this:

Here, A1 is the closure that invokes the main() function. If the main() function does not enqueue any continuations itself, the application quits when the main() function returns.

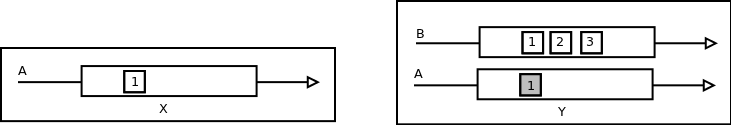

If, however, the main() function were to enqueue a continuation B before returning, for example:

int main(const list<string>& argl) { run => fn1() => fn2() => return(0); return 0; }

The run time queue will now look like this:

Here, X is the initial fiber in the queue which executed the main() function. When the main() function enqueued a continuation B, the system created a clone Y of fiber X and pushed the new continuation at the top of fiber Y.

In the code sample above, the main() function has enqueued a continuation with 3 closures:

At this stage, there are 2 fibers in the sytem, X and Y. Both these fibers are complete in themselves and can execute independent of each other, either in the same thread, or in different threads, or (in future) on different processes.

To repeat, the new fiber is an identical copy of the current one, including the functions to be called and the data stored in the closures, and when function calls return, the unwinding occurs in the same order for both. This is similar to the POSIX fork() mechanism.

In this example:

int main(const list<string>& argl) { run => fn1() => fn2() => return(0); return 0; }

There will be two distinct execution paths.

In zenlang, every continuation has a return type, as does every closure in the continuation.

To explain, lets take a look at a more complex code sample:

namespace ClosureReturnDemo; (int op) b01 () { return (3); } (int op) b02 () { return (5); } (int op) a02 (int ip) { return (ip * 7); } (int op) a01 () { run => b01() => b02(); return (9); } int main(const list<string>& argl) { unused(argl); run => a01() => a02(ca01.op); // any closure can be referred to by prefixing a 'c' to its function name return 0; }

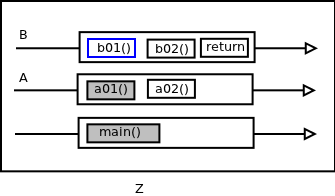

The flow of this code is quite simple.

In the images above, The closures indicated by the grey boxes are the parent closures for the continuation above. For example, if a01() enqueued continuation 'B', the a01() is the parent closure for continuation 'B'.

Remember again, X, Y and Z are 3 independent fibers in the queue. Here's a quick recap of the running sequence:

As mentioned earlier in the Getting Started guide, the return value of a closure can be passed to another closure in the same continuation.

In fiber Y, the function a01() returned the value 9 in return parameter 'op'. When this fiber is next executed, a02() is called with the parameter ca01.op, which is 9. This is fairly simple and straightforward.

However, the situation gets complicated in fiber Z. At the point in time when Z was being created, the state of Y was that the function main() was already executed, a01() is currently being executed, and a02() is yet to be executed.

The function a01() looks like this:

(int op) a01 () { run => b01() => b02(); return (9); }

When function a01() executes the run statement to enqueue a continuation 'B', a new fiber (here Z) is created as an exact replica of fiber Y, and the new continuation is pushed on the top of Z. The state of Z now is

When fiber 'z' gets executed subsequently, it runs functions b01() and b02() in that order. After that, it now needs to run function a02(). But here's the catch. When calling function a02(), it has to pass the return value of a01() as an input parameter to a02(). Sinc this fiber 'z' was created before a01() returned, at this point a01()'s return value would have been indeterminate.

Since continuation 'b' was executed as a 'subroutine' of continuation 'A', what is needed is for the return value of continuation 'B' to be placed into continuation 'A', as the return value of function a01(). To this end, we now modify the function a01() as follows:

(int op) a01 () { run => b01() => b02() => return(8); return (9); }

Fiber 'Z' now looks like this:

The return value of continuation 'B' is inserted into continuation A as the return value of a01(), which is the parent closure of continuation 'B'. When function a02() is invoked, this return value (8) is passed as the parameter.

The last closure in continuation B is called a return closure, and is used to return a value to the parent closure of the current continuation.

This execution model aims to minimize data sharing and maximize data replication. Any data that needs to be processed by another fiber is passed by value to that fiber, where a complete deep copy is created and stored.

Consider, for example, a small change to the earlier code sample:

int main(const list<string>& argl) { local v = 10; run => fn1() => fn2(v) => return(0); return 0; }

In this function, 'v' is a local variable in the main() function, and it gets passed to the second closure of the new continuation (B2). This is represented by the following diagram:

Note that the first instance of 'v' (the red dot) is a local variable in main(). It goes out of scope and its memory (on the stack) get released when the main() function returns.

The closure B2 (in fiber Y) now operates on the closure variable 'v' (the blue dot).

At a more practical level, this model can be better understood with the example of, say, a quicksort implementation.

Imagine a quicksort function that takes an array, identifies a pivot, does the swap and enqueues two fibers, each one with a copy of one part of the input array.

The quicksort function is called repeatedly with the part array, enqueuing more and more fibers until the input array size is 0 or 1.

Along with the part-array, the closure also stores the location of the part-array in the main array. This information, along with the sorted data, is now passed onwards to the next continuation, which collates all the data into the final output array.

This brings us to the topic of synchronization, but before that, a quick recap of everything we have learnt so far.

Unless the computation is what is known as "embarassingly parallel", the parallel tasks must synchronize with each other at some point in time. This is done with the usual synchronization mechanisms, such as mutexes.

(Work in progress)

The use of application-wide singletons, while discouraged, are often necessary. For example, a global data structure to hold a list of all active sessions on a web application server.

This is made possible in zenlang by creating the data structure and passing it to an exit fiber which gets executed when the application exits, and is responsible for cleaning up and deleting that data structure.

(Work in progress)